Third-ever confirmed interstellar object blazing through solar system

Astronomers on Wednesday confirmed the discovery of an interstellar object racing through our solar system—only the third ever spotted, though scientists suspect many more may slip past unnoticed.

The visitor from the stars, designated 3I/Atlas, is likely the largest yet detected, and has been classified as a comet, or cosmic snowball.

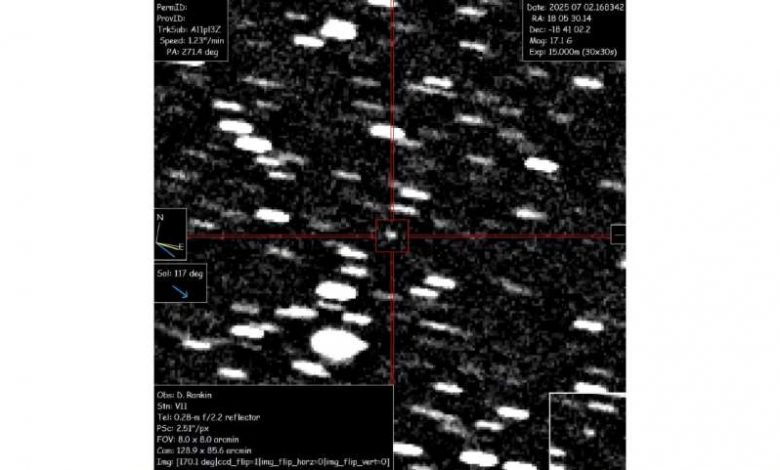

“It looks kind of fuzzy,” Peter Veres, an astronomer with the International Astronomical Union’s Minor Planet Center, which was responsible for the official confirmation, told AFP.

“It seems that there is some gas around it, and I think one or two telescopes reported a very short tail.”

Originally known as A11pl3Z before it was confirmed to be of interstellar origin, the object poses no threat to Earth, said Richard Moissl, head of planetary defense at the European Space Agency.

“It will fly deep through the solar system, passing just inside the orbit of Mars,” but will not hit our neighboring planet, he told AFP.

Excited astronomers are still refining their calculations, but the object appears to be zooming along at more than 60 kilometers (37 miles) a second.

This would mean it is not gravitationally bound to the sun, unlike objects that remain in orbit within the solar system.

Its trajectory also “means it’s not orbiting our star, but coming from interstellar space and flying off to there again,” Moissl said.
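The "not bound" conclusion can be checked with a rough calculation: an object escapes the sun if it moves faster than the solar escape speed at its current distance. The sketch below is illustrative only. It uses the quoted speed of 60 kilometers a second and assumes a distance of about 5 astronomical units from the sun, a figure chosen for the example rather than one reported here. The function names are hypothetical.

```python
import math

GM_SUN = 1.32712440018e20  # sun's gravitational parameter, m^3/s^2
AU = 1.495978707e11        # one astronomical unit, m


def escape_speed_kms(distance_au):
    """Solar escape speed in km/s at a given distance from the sun."""
    r = distance_au * AU
    return math.sqrt(2 * GM_SUN / r) / 1000.0


def is_unbound(speed_kms, distance_au):
    """True if the object moves faster than the local escape speed."""
    return speed_kms > escape_speed_kms(distance_au)


# Assumed for illustration: roughly 5 AU from the sun, moving at 60 km/s.
distance_au = 5.0
speed_kms = 60.0
print(f"escape speed at {distance_au} AU: {escape_speed_kms(distance_au):.1f} km/s")
print("unbound:", is_unbound(speed_kms, distance_au))
```

At that assumed distance the escape speed comes out below 20 kilometers a second, so an object moving three times as fast cannot be held in orbit by the sun.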

“We think that probably these little ice balls get formed associated with star systems,” added Jonathan McDowell, an astronomer at the Harvard-Smithsonian Center for Astrophysics. “And then as another star passes by, tugs on the ice ball, frees it out. It goes rogue, wanders through the galaxy, and now this one is just passing us.”

A Chile-based observatory that is part of the NASA-funded ATLAS survey first discovered the object on Tuesday.

Professional and amateur astronomers across the world then searched through past telescope data, tracing its trajectory back to at least June 14.

The object is currently estimated to be roughly 10-20 kilometers wide, Moissl said, which would make it the largest interstellar interloper ever detected. But the object could be smaller if it is made out of ice, which reflects more light.
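The link between reflectivity and size follows a standard scaling: for a fixed observed brightness, the inferred diameter is proportional to one over the square root of the albedo, the fraction of light the surface reflects. The sketch below rescales the quoted 10-20 kilometer range under two hypothetical albedos, 0.05 for a dark surface and 0.5 for a bright icy one. Neither value comes from the astronomers quoted here, and the function name is made up for the example.

```python
import math


def rescale_diameter_km(diameter_km, assumed_albedo, actual_albedo):
    """Rescale a brightness-based diameter estimate to a different albedo.

    For a fixed observed brightness, diameter scales as 1 / sqrt(albedo).
    """
    return diameter_km * math.sqrt(assumed_albedo / actual_albedo)


# Hypothetical albedos: 0.05 (dark surface) versus 0.5 (bright, icy surface).
for estimate_km in (10.0, 20.0):
    smaller_km = rescale_diameter_km(estimate_km, 0.05, 0.5)
    print(f"{estimate_km:.0f} km at albedo 0.05 -> {smaller_km:.1f} km at albedo 0.5")
```

Under those assumed albedos, a 20-kilometer estimate would shrink to roughly 6 kilometers, which shows why the size remains uncertain until the surface composition is better known.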

Veres said the object will continue to brighten as it nears the sun, bending slightly under the pull of gravity, and is expected to reach its closest point—perihelion—on 29 October.

It will then recede and exit the solar system over the next few years.

Our third visitor

This marks only the third time humanity has detected an object entering the solar system from the stars.

The first, ‘Oumuamua, was discovered in 2017. It was so strange that at least one prominent scientist became convinced it was an alien vessel—though this has since been contradicted by further research.

Our second interstellar visitor, 2I/Borisov, was spotted in 2019.

There is no reason to suspect an artificial origin for 3I/Atlas, but teams around the world are now racing to answer key questions about its shape, composition, and rotation.

Mark Norris, an astronomer at the UK’s University of Central Lancashire, told AFP that the new object appears to be “moving considerably faster than the other two extrasolar objects that we previously discovered.”

The object is currently about as far from Earth as Jupiter is, Norris said.

Norris pointed to modeling estimating that there could be as many as 10,000 interstellar objects drifting through the solar system at any given time, though most would be smaller than the newly discovered object.

If true, this suggests that the newly online Vera C. Rubin Observatory in Chile could soon be finding these dim interstellar visitors every month, Norris said.

Moissl said it is not feasible to send a mission into space to intercept the new object.

Still, these visitors offer scientists a rare chance to study something outside of our solar system.

For example, if we detected precursors of life such as amino acids on such an object, it would give us “a lot more confidence that the conditions for life exist in other star systems,” Norris said.